Complex technologies like computers are, well, complicated, incredibly complicated. Not even their designers can anticipate how they will behave in every possible situation, which is why there are beta testers and bug reports. Engineers build machines to perform specific tasks, but their ideas are necessarily rooted in past experience. So there is no way to predict how the devices will actually be used in the real world, particularly the other uses and misuses that clever monkeys may find for the technology.

The history of the internet is full of examples. Take email, for instance: that marvelous method of fast, cheap communication led to floods of annoying spam at the hands of greedy individuals and was further weaponized by even more malicious hackers. Personalized search engines like Google were meant to make the entire body of online information instantly available for answering any question. However, by basing results largely on what the seeker had looked for previously, they help establish filter bubbles, which tend to reinforce narrow opinions rather than challenge them. Ironically, these indexing tools may have led to more confusion and online discord than objective truth and consensus.

Even cellphones, despite providing effortless communication, not to mention maps and other helpful information, have become a major cause of distracted driving and traffic accidents. And there are many other unintended consequences that can surprise developers and users alike.

Some derive from unforeseen flaws in technological implementation. One such is the “Scunthorpe problem”, named after an incident in 1996 when an obscenity filter at AOL gave a false alarm for the name of the town of Scunthorpe in England. It decided there was a forbidden word embedded in the name and blocked all email involving that location. Many other such examples can be found online, based on unfortunate last names, domains, and even innocent academic awards like “magna cum laude”.
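To see how a filter can trip over an innocent name, here is a minimal sketch in Python. It is not AOL's actual filter, whose workings are not described here. The blocklist, the mild stand-in word, and the function names `naive_filter` and `whole_word_filter` are all assumptions made up for illustration.

```python
import re

# Hypothetical blocklist using a mild stand-in word (an assumption for this sketch).
BLOCKLIST = {"hell"}


def naive_filter(text: str) -> bool:
    """Flag text if a blocked word appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(word in lowered for word in BLOCKLIST)


def whole_word_filter(text: str) -> bool:
    """Flag text only if a blocked word appears as a standalone word."""
    alternatives = "|".join(re.escape(word) for word in sorted(BLOCKLIST))
    pattern = r"\b(?:" + alternatives + r")\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


if __name__ == "__main__":
    for sample in ["Michelle sells seashells", "Go to hell"]:
        print(sample, "| naive:", naive_filter(sample),
              "| whole word:", whole_word_filter(sample))
```

The naive version flags “Michelle” and “seashells” because it matches substrings, which is the kind of false alarm behind the Scunthorpe incident. Matching whole words avoids that particular mistake, but it would still trip on a phrase like “magna cum laude”, where the offending string really is a separate word, so it is only a partial fix.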

Others are grounded in human psychology, such as the “Streisand effect”, where an attempt to hide or censor information actually draws more attention to it. It’s named after the famous singer, who in 2003 tried to get pictures of her home off the internet and thereby generated a great deal of curiosity about them.

All these arose from a single technological development or from the perverse effects of humans manipulating it for their own gain. But even good intentions can rebound with effects that make the situation worse, as sometimes happens when websites require strong passwords. Often users will simply write the passwords on a sticky note stuck right on the computer.

But what can happen when such complex systems interact with each other? Like it or not, we’re just at the very beginning of the exciting process of finding out.

Right now, the biggest technological trend is the Internet of Things: hooking up devices to the internet. In the rush to be first to market with a product, manufacturers tend to neglect security or fail to think the problem through clearly, as we’ve reported before.

We’re already seeing one result of such failures – hackers have managed to develop massive botnets, which have successfully (albeit temporarily) shut down major sites running critical internet services. There’s a certain irony in all these devices, connected to make life easier, being used to shut down the net, but that is just one of the first major unforeseen bad results of the Internet of Things. With exploits such as hackers using security cameras to mine bitcoins, it surely won’t be the last.

The situation will eventually involve life or death issues – such as with driverless cars. It’s not just that cars can be hacked, too, but such vehicles must be able to make split-second moral decisions. One classic ethical dilemma is the “trolley problem”. If a smart vehicle has to choose between hitting a group of pedestrians and slamming into a wall, killing the driver, what should it do? And to complicate it further: what if other driverless cars are involved in that same situation?

Others can be more amusing. In various homes, Amazon’s voice-activated Echo devices heard a TV report about a girl ordering a dollhouse, and a number of them thought it was directed at them and tried to order the toys. People have also experimented with having Alexa and the similar Google Home device sit next to each other and talk. So far they just repeat themselves in a seemingly endless cycle, but when someone set two Google Homes next to each other, the results were much more interesting. For nearly a week, they argued, joked, and even flirted.

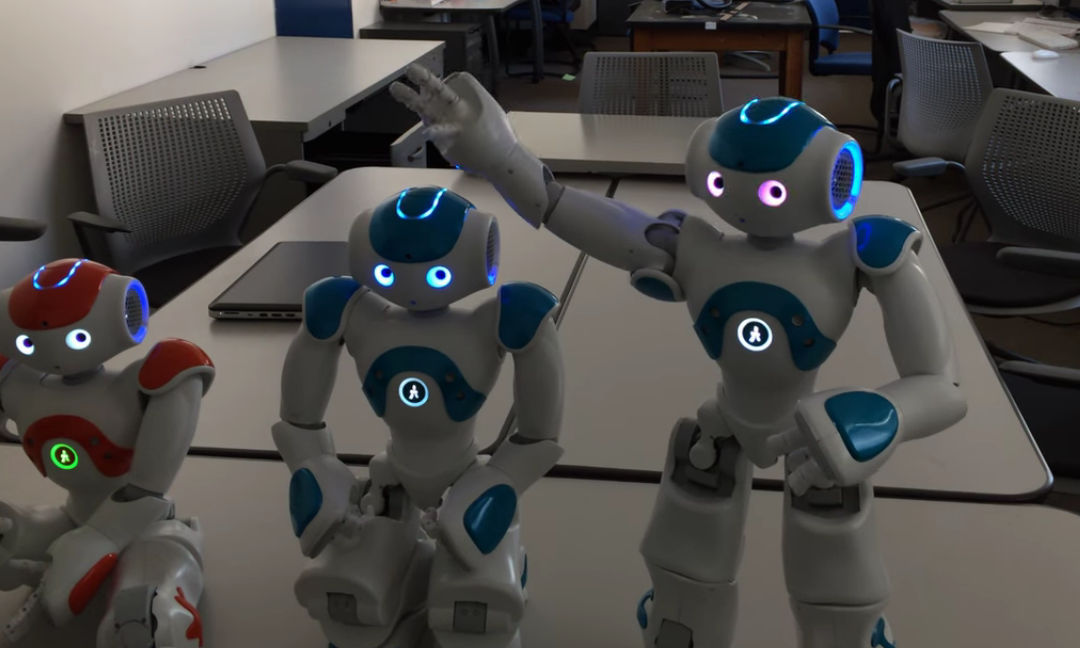

In another experiment, French researchers posed a version of a classic puzzle called “the king’s wise men”, where each of three tiny robots was challenged to figure something out about itself only by observing the others. A video captures the eerie moment where one displays a glimmer of self-awareness. Whether or not this is how Skynet begins, it shows that surprising results can, and likely will, happen.

Fortunately, sometimes there are unintended benefits. The Scania R 450 semi used in the Berlin Christmas market attack last month was stopped by an automatic braking system after hitting the first person. This is why there were only 11 people run over, unlike the similar attack last summer in Nice, France, where a terrorist-driven truck killed 86.

Someday with all these smart things running our lives, the Three Laws of Robotics may be necessary. But both innovators and ordinary users should always remember Murphy’s Law: Anything that can go wrong, will go wrong.