The internet has become an essential utility of the modern world – such a part of the functioning of our global society that the very name has lost its capitalization. Like other basic services such as electric power, telephone, water, and sewage, the internet’s real importance to those who use it is in what it can provide, rather than what it is.

Most users don’t care at all how the net works, only that it continues to do so. But whether driving a car or surfing the web, it pays to have a basic understanding of what’s going on under the hood. A little knowledge helps in diagnosing problems when things go wrong; knowing whether something that is easily fixed or needs the services of a professional can save much time and trouble.

Moreover, having an idea of how things work allows a user to get the more benefits from the technology and generally makes things run a little smoother. Therefore, here begins a short series of articles to provide a simple look at the wonderful workings of the World Wide Web behind the screen. If nothing else, this should give readers a few bits of interesting trivia.

The net and the web

The first thing to realize is that the web is not the same as the internet. The internet is primarily physical: it is the global network of computer networks that communicate with each other through a series of agreed-upon rules, or protocols. The web is just a fraction of the services that run on the planet-spanning system of networks. It is the electronic function of the internet that is made up of websites and webpages. But the net also handles email, file-moving systems, and various methods of doing things behind the scenes, some of which are highly technical, others that have become obsolete and more being added as needed.

Since the internet is defined by the rules it uses, the web can also described as anything using the special protocol invented just for viewing the World Wide Web in web browsers. This is HTTP, the HyperText Transfer Protocol, which moves and connects files written in HTML, the HyperText Mark-up Language.

Unlike much computer terminology, this particular mouthful is actually quite descriptive. “Hyper” comes from the Greek for “above” or “beyond”, in this case “text” or written words, the content of the page. It is called a “mark-up” as it inserts information between these words, like an editor or teacher might to correct a written essay. This mark-up is written in a “language”, an ordered set of rules that describes the nature or appearance of the text. Thus an HTML document, that is, a webpage, is one where the content has been marked up in a certain unseen code that tells it how it should look and act in a web browser.

HTML governs the basic display of verbal information, images, and data of webpages on screens. Therefore for convenience, the code itself is usually kept completely invisible to the reader. Other codes, invisibly hidden in or linked to HTML tags, determine the precise layout, look, and behavior of elements of the content, but they will be discussed another day.

What is important to note here is behavior. For with a single click, the specially-marked text and images in HTML can instantly summon other content from computers around the world, which is why the whole system is also proudly referred to as the “World Wide Web”.

These hyperlinks come in special tags surrounding words or images. They contain internet addresses to other pages or pictures. For the real power and purpose of HTML comes from its ability to connect with other HTML files and internet resources. These links are what make the web, and make it much more than the sum of its parts.

The web works because of its precision which is enabled by the Domain Name System. Domain names allow internet sites anywhere to be addressed in a short, user-friendly way, like www.swcp.com. Hyperlinks use these addresses to enable single words or images in one document to be associated with others in pages over a world away, and to retrieve that information quickly.

Where the web came from

Dreams of a global system of information retrieval, a “universal library”, long predate the internet and digital computers, at least since the mid-Nineteenth Century. Daily newspapers printing stories sent by electric telegraph from distant lands was the first hint of the marvels to come.

But all such early proposals, such as that of Paul Otlet, a Belgian who invented the Universal Decimal Classification system of indexing books and also card catalogs for libraries, depended entirely on human thought and labor. They could never achieve the necessary speed and flexibility to succeed. But for decades mechanical offices and other such devices remained popular features of the fabulous future promised on the funny pages of newspapers.

The first real glimmer of a personalized universal library came in 1945, barely a month before the first atom bomb was dropped. As We May Think first appeared in the July edition of The Atlantic magazine, written by Dr. Vannevar Bush, a brilliant engineer and administrator who oversaw the military’s scientific research efforts (including the building of the bomb) during World War II (and just possibly UFO research after Roswell).

Bush proposed a device he called the memex, a desk equipped with screens which could display documents from a built-in microfilm library. The memex would thus contain all of a person’s books, records, and letters, mechanized for fast and easy consultation. The memex would thus function a kind of personal memory extension. Furthermore, as the documents would be somehow mechanically linked according to the user’s own associations and keywords rather than a static index, his overall concept rather resembled a personal computer attached to Google. It would even have a voice-driven typewriter and cameras.

How links worked between documents remained vague, but the article electrified many young thinkers. One of these was Ted Nelson, who later invented both the concept and term of hypertext. However, he and his supporters groused that his vision would have had hyperlinks working both ways to provide context and prevent dead or broken links, unlike the one-way links HTML is based upon. But HTML is still a most useful invention anyway.

The father of the web, Tim Berners-Lee, was inspired by the idea of hypertext. He worked for CERN, the massive European nuclear research laboratory, and his problem was to get the atomic scientists to put their results online for others to see. He floated an initial proposal in 1989, but it took over five years to be approved and worked out.

The way scientific results were primarily shared at the time was through poster sessions at conferences, still the time-honored way kids’ science fair projects are displayed. Poster sessions carry brief descriptions of scientific experiments and their results mounted on boards. They have long titles, and are usually covered with charts, graphs, and photos. There’s nothing fancy or pretty at all about them; clarity is the sole aim.

So the web was invented to share science fair type exhibits. But the invention of the personal computer bestowed the means for anyone to take advantage of such a delivery system of rich visual information, not just scientists with their massive mainframes. The web and the PC made perfect companions – in fact, Berners-Lee’s first web-server and web browser were made on a NeXT workstation built by Steve Jobs after he left Apple.

Several things helped make HTML succeed. One was its simplicity – with a little familiarity even non-geeks could make sense of the code – and the other was that nobody owned it. HTML was shared with the world without ever being patented by Berners-Lee or CERN: open-source before that was even a thing. Probably not since Benjamin Franklin gave the world the lightning rod has a single act of generosity proven so beneficial to the human race. For his work, Tim Berners-Lee was knighted and remains a respected visionary who continues to labor to make his creation live up to his ideals.

Since nobody owned the web, everybody did. To be useful to the whole world, that meant its standards had to be universal, voluntarily agreed-upon rather than enforced by decree. This meant that the “browser wars” – the competition between rival web browsers in the early 2000s could not last forever. Some parties, like Microsoft, tried to foist their own proprietary versions of the standards to dominate their rivals, but all such efforts failed. Sooner or later, the winners and losers had to agree.

Anatomy of a webpage

In November 1995, HTML 2.0 was released – the first published standard – by 2000, it had evolved up past 4.0, which was widely used by competing browsers. It’s gone beyond version 5 now. Along the way, new tags and capabilities were added and some quietly disappeared – such as the universally-annoying tag that made type incessantly blink on and off.

Webpages started off pretty ugly and clunky. Any font could be specified in a webpage, but that font had to be installed on the surfer’s machine to be used. The basic problem is that the appearance of the webpage is totally dependent on the resources of the device it is viewed upon and the quirks and capabilities of the program that does it.

This has been the biggest design headache of HTML, one which continues to this day. This is one reason web designers charge so much. Trying to get the same look out of every possible viewing situation and browser has made for insanely complicated code, oftentimes using inelegant hacks, resulting in bland pages and occasional horrific messes. Not to mention a few burned-out coders and designers, too.

One of the most popular workarounds for a long time was using HTML tables to format webpages. Tables were intended to present regular arrays of data, but since each cell could be made a different size and hold graphics and even other cells, they were often stretched and squished to try to approximate a desired, usually complicated, layout. These problems have only been solved recently, partially through the use of the new “div” tag, which causes a section of the page to be treated as a single unit.

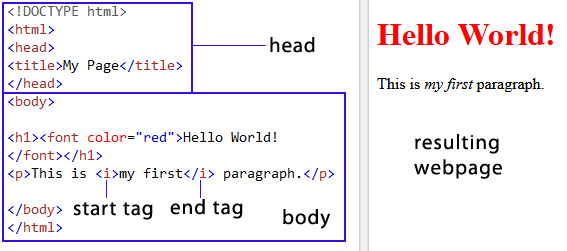

HTML code is very easy to recognize. Uniquely, all HTML tags are surrounded by angle brackets, that is, each tag starts with < and closes with >. These special characters are used by HTML to alert the web browser to the tag and not to display the tag itself. Almost all HTML tags come in two parts, starting and closing tags. A backslash (/) is included to show ending tags. Only the content visible between the twin tags is affected by them.

An HTML document and resulting webpage

So to display < as meaning “less than” in the content of a webpage, it also has to be written in special characters to make it actually visible on the page, in this case as <. Otherwise the browser will see the left angle bracket as a tag marker. Some tags are simple: “h1” is a headline, “p” indicates a paragraph, “i” for italic, “b” for bold and so on. Further complications come from nesting tags to apply multiply conditions to text, so there could be a bold headline with just the title of a book italicized in it. Others that describe placement or color effects or contain scripts can be much more complicated.

A webpage has lots of HTML scattered throughout, identifying one thing as a headline, another as a paragraph, making a word bold or italic, telling the page to place an image in one place and a link in another, and so on. None of the fonts, icons, and graphics, photos, or film clips that the page shows are actually part of the document, sometimes not even the text itself. HTML links fetch them all to be embedded in the right spots when the page is actually viewed. Ads or news feeds may be fetched from other servers as well.

Even so, there is a lot more information hidden in a webpage than is visible in the “body”, the section of the webpage that contains all the content. Above that is another section, invisible in browsers, the “head” which contains data about the document itself. This includes its title and basic instructions, plus much “meta” data about who wrote it, what it’s about, keywords, and so forth, which is often helpful to search engines.

HTML will keep evolving. It already has been adapted to many non-English languages and writing systems such as Chinese. Recent developments also include tags for formatting audible information for deaf people.

But to do its job effectively on all devices, much more than HTML is needed. HTML only provides a basic framework to the content. To employ flexible and elegant layouts for every screen and be truly interactive, style sheets and scripts are needed, too. These will be discussed in future articles in this series.